Outline History of Nuclear Energy

- The science of atomic radiation, atomic change and nuclear fission was developed from 1895 to 1945, much of it in the last six of those years.

- Over 1939-45, most development was focused on the atomic bomb.

- From 1945 attention was given to harnessing this energy in a controlled fashion for naval propulsion and for making electricity.

- Since 1956 the prime focus has been on the technological evolution of reliable nuclear power plants.

Exploring the nature of the atom

Uranium was discovered in 1789 by Martin Klaproth, a German chemist, and named after the planet Uranus.

Ionising radiation was discovered by Wilhelm Röntgen in 1895, by passing an electric current through an evacuated glass tube and producing continuous X-rays. Then in 1896 Henri Becquerel found that pitchblende (an ore containing radium and uranium) caused a photographic plate to darken. He went on to demonstrate that this was due to beta radiation (electrons) and alpha particles (helium nuclei) being emitted. Villard found a third type of radiation from pitchblende: gamma rays, which were much the same as X-rays. Also in 1896 Pierre and Marie Curie gave the name 'radioactivity' to this phenomenon, and in 1898 they isolated polonium and radium from the pitchblende. Radium was later used in medical treatment. In 1898 Samuel Prescott showed that radiation destroyed bacteria in food.

In 1902 Ernest Rutherford showed that radioactivity, as a spontaneous event emitting an alpha or beta particle from the nucleus, created a different element. He went on to develop a fuller understanding of atoms and in 1919 he fired alpha particles from a radium source into nitrogen and found that nuclear rearrangement was occurring, with formation of oxygen. Niels Bohr was another scientist who advanced our understanding of the atom and the way electrons were arranged around its nucleus through to the 1940s.

By 1911 Frederick Soddy had discovered that naturally-radioactive elements had a number of different isotopes (radionuclides), with the same chemistry. Also in 1911, George de Hevesy showed that such radionuclides were invaluable as tracers, because minute amounts could readily be detected with simple instruments.

In 1932 James Chadwick discovered the neutron. Also in 1932 Cockcroft and Walton produced nuclear transformations by bombarding atoms with accelerated protons, then in 1934 Irene Curie and Frederic Joliot found that some such transformations created artificial radionuclides. The next year Enrico Fermi found that a much greater variety of artificial radionuclides could be formed when neutrons were used instead of protons.

Fermi continued his experiments, mostly producing heavier elements from his targets, but also, with uranium, some much lighter ones. At the end of 1938 Otto Hahn and Fritz Strassmann in Berlin showed that the new lighter elements were barium and others which were about half the mass of uranium, thereby demonstrating that atomic fission had occurred. Lise Meitner and her nephew Otto Frisch, working under Niels Bohr, then explained this by suggesting that the neutron was captured by the nucleus, causing severe vibration leading to the nucleus splitting into two not quite equal parts. They calculated the energy release from this fission as about 200 million electron volts. Frisch then confirmed this figure experimentally in January 1939.

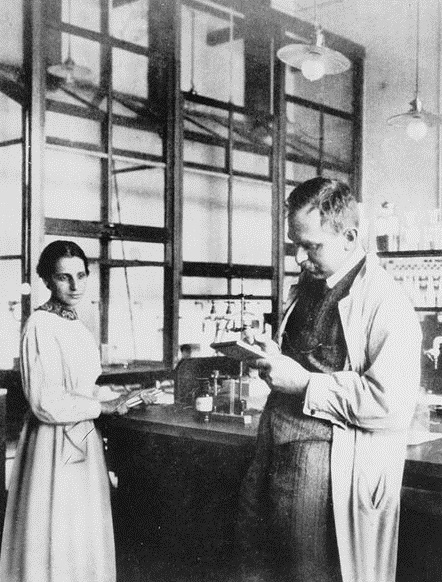

Lise Meitner and Otto Hahn, c. 1913

This was the first experimental confirmation of Albert Einstein's paper putting forward the equivalence between mass and energy, which had been published in 1905.
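As a rough illustration of that equivalence, the figure of about 200 million electron volts (200 MeV) per fission can be converted into a mass difference. The conversion factor of about 931.5 MeV per atomic mass unit (u) and the approximate mass of 236 u for a uranium-235 nucleus plus the captured neutron are standard values assumed here, not figures from the account above.

```latex
\begin{align*}
E &= \Delta m \, c^{2} \\
\Delta m &= \frac{E}{c^{2}} \approx \frac{200\ \mathrm{MeV}}{931.5\ \mathrm{MeV}/\mathrm{u}} \approx 0.21\ \mathrm{u} \\
\frac{\Delta m}{m} &\approx \frac{0.21\ \mathrm{u}}{236\ \mathrm{u}} \approx 0.09\%
\end{align*}
```

On these assumptions, a little under one-thousandth of the original mass is converted into energy in each fission.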

Harnessing nuclear fission

These 1939 developments sparked activity in many laboratories. Hahn and Strassmann showed that fission not only released a lot of energy but also released additional neutrons, which could cause fission in other uranium nuclei and possibly a self-sustaining chain reaction leading to an enormous release of energy. This suggestion was soon confirmed experimentally by Joliot and his co-workers in Paris, and Leo Szilard working with Fermi in New York.

Bohr soon proposed that fission was much more likely to occur in the uranium-235 isotope than in U-238 and that fission would occur more effectively with slow-moving neutrons than with fast neutrons. The latter point was confirmed by Szilard and Fermi, who proposed using a 'moderator' to slow down the emitted neutrons. Bohr and Wheeler extended these ideas into what became the classical analysis of the fission process, and their paper was published only two days before war broke out in 1939.

Another important factor was that U-235 was then known to comprise only 0.7% of natural uranium, with the other 99.3% being U-238, with similar chemical properties. Hence the separation of the two to obtain pure U-235 would be difficult and would require the use of their very slightly different physical properties. This increase in the proportion of the U-235 isotope became known as 'enrichment'.
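The scale of the separation task can be sketched with a simple mass balance. The 0.7% natural abundance comes from the text above; the product and tails concentrations used in the second example, and the function name `feed_per_kg_product`, are illustrative assumptions rather than historical figures.

```python
def feed_per_kg_product(product: float, feed: float = 0.007, tails: float = 0.0) -> float:
    """Kilograms of feed uranium needed per kilogram of enriched product.

    All arguments are U-235 mass fractions. The result follows from
    conserving total uranium and U-235 across feed, product and tails.
    """
    if not (0.0 <= tails < feed < product <= 1.0):
        raise ValueError("expected 0 <= tails < feed < product <= 1")
    return (product - tails) / (feed - tails)


# Ideal lower bound: pure U-235 with every U-235 atom recovered (tails = 0).
print(f"{feed_per_kg_product(1.0):.0f} kg of natural uranium per kg of U-235")

# Hypothetical case: 90% product, with 0.3% U-235 left behind in the tails.
print(f"{feed_per_kg_product(0.90, tails=0.003):.0f} kg of feed per kg of product")
```

Even the ideal case requires well over a hundred kilograms of natural uranium per kilogram of U-235, and any realistic tails concentration pushes the feed requirement higher.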

The remaining piece of the fission/atomic bomb concept was provided in 1939 by Francis Perrin who introduced the concept of the critical mass of uranium required to produce a self-sustaining release of energy. His theories were extended by Rudolf Peierls at Birmingham University and the resulting calculations were of considerable importance in the development of the atomic bomb. Perrin's group in Paris continued their studies and demonstrated that a chain reaction could be sustained in a uranium-water mixture (the water being used to slow down the neutrons) provided external neutrons were injected into the system. They also demonstrated the idea of introducing neutron-absorbing material to limit the multiplication of neutrons and thus control the nuclear reaction (which is the basis for the operation of a nuclear power station).
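The ideas of a self-sustaining reaction, of a mixture that only runs while external neutrons are injected, and of control by neutron absorbers can be sketched with a toy generation-by-generation model. The multiplication factor `k` (neutrons in one generation per neutron in the previous one), the function names and the numerical values are assumptions made for illustration; the model ignores geometry, timing and delayed neutrons.

```python
def neutron_population(k: float, generations: int, n0: float = 1.0) -> list[float]:
    """Neutron count in each generation for a constant multiplication factor k."""
    if generations < 0:
        raise ValueError("generations must be non-negative")
    return [n0 * k**g for g in range(generations + 1)]


def population_with_source(k: float, source: float, generations: int) -> float:
    """Neutron count after repeatedly multiplying by k and injecting `source` neutrons."""
    n = 0.0
    for _ in range(generations):
        n = k * n + source
    return n


# k < 1 dies away, k = 1 is just self-sustaining, k > 1 grows without limit.
for label, k in [("subcritical", 0.9), ("critical", 1.0), ("supercritical", 1.1)]:
    final = neutron_population(k, 50)[-1]
    print(f"{label:>13} (k={k}): {final:.3g} neutrons after 50 generations")

# A subcritical mixture fed by an external source settles near source / (1 - k).
print(f"with source: {population_with_source(0.9, 1.0, 500):.3f} neutrons")
```

In this picture, adding neutron-absorbing material lowers `k`, which is the sense in which absorbers limit the multiplication of neutrons.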

Peierls had been a student of Werner Heisenberg, who from April 1939 presided over the German nuclear energy project under the German Ordnance Office. Initially this was directed towards military applications, and by the end of 1939 Heisenberg had calculated that nuclear fission chain reactions might be possible. When slowed down and controlled in a 'uranium machine' (nuclear reactor), these chain reactions could generate energy; when uncontrolled, they would lead to a nuclear explosion many times more powerful than a conventional explosion. It was suggested that natural uranium could be used in a uranium machine, with heavy water moderator (from Norway), but it appears that researchers were unaware of delayed neutrons which would enable a nuclear reactor to be controlled. Heisenberg noted that they could use pure uranium-235, a rare isotope, as an explosive, but he apparently believed that the critical mass required was higher than was practical.

In the summer of 1940, Carl Friedrich von Weizsäcker, a younger colleague and friend of Heisenberg's, drew upon publications by scholars working in Britain, Denmark, France, and the USA to conclude that if a uranium machine could sustain a chain reaction, then some of the more common uranium-238 would be transmuted into 'element 94', now called plutonium. Like uranium-235, element 94 would be an incredibly powerful explosive. In 1941, von Weizsäcker went so far as to submit a patent application for using a uranium machine to manufacture this new radioactive element.

By 1942 the military objective was wound down as impractical, requiring more resources than were available. The priority became building rockets. However, the existence of the German Uranverein project provided the main incentive for wartime development of the atomic bomb by Britain and the USA.

Nuclear physics in Russia

Russian nuclear physics predates the Bolshevik Revolution by more than a decade. Work on radioactive minerals found in central Asia began in 1900 and the St Petersburg Academy of Sciences began a large-scale investigation in 1909. The 1917 Revolution gave a boost to scientific research and over 10 physics institutes were established in major Russian towns, particularly St Petersburg, in the years which followed. In the 1920s and early 1930s many prominent Russian physicists worked abroad, encouraged by the new regime initially as the best way to raise the level of expertise quickly. These included Kirill Sinelnikov, Pyotr Kapitsa and Vladimir Vernadsky.

By the early 1930s there were several research centres specialising in nuclear physics. Kirill Sinelnikov returned from Cambridge in 1931 to organise a department at the Ukrainian Institute of Physics and Technology (later renamed Kharkov Institute of Physics and Technology, KIPT) in Kharkov, which had been set up in 1928. Academician Abram Ioffe formed another group at the Leningrad Physics and Technical Institute (FTI), later becoming independent as the Ioffe Institute, including the young Igor Kurchatov. Ioffe was its first director, through to 1950.

By the end of the decade, there were cyclotrons installed at the Radium Institute and Leningrad FTI (the biggest in Europe). But by this time many scientists were beginning to fall victim to Stalin's purges – half the staff of Kharkov Institute, for instance, was arrested in 1939. Nevertheless, 1940 saw great advances being made in the understanding of nuclear fission including the possibility of a chain reaction. At the urging of Kurchatov and his colleagues, the Academy of Sciences set up a "Committee for the Problem of Uranium" in June 1940 chaired by Vitaly Khlopin, and a fund was established to investigate the central Asian uranium deposits. The Radium Institute had a factory in Tatarstan used by Khlopin to produce Russia’s first high-purity radium. Germany's invasion of Russia in 1941 turned much of this fundamental research to potential military applications.

Conceiving the atomic bomb

British scientists had kept pressure on their government. The refugee physicists Peierls and Frisch (who had stayed in England with Peierls after the outbreak of war) gave a major impetus to the concept of the atomic bomb in a three-page document known as the Frisch-Peierls Memorandum. In this they predicted that an amount of about 5 kg of pure U-235 could make a very powerful atomic bomb equivalent to several thousand tonnes of dynamite. They also suggested how such a bomb could be detonated, how the U-235 could be produced, and what the radiation effects might be in addition to the explosive effects. They proposed thermal diffusion as a suitable method for separating the U-235 from the natural uranium. This memorandum stimulated a considerable response in Britain at a time when there was little interest in the USA.

A group of eminent scientists known as the MAUD Committee was set up in Britain and supervised research at the Universities of Birmingham, Bristol, Cambridge, Liverpool and Oxford. The chemical problems of producing gaseous compounds of uranium and pure uranium metal were studied at Birmingham University and Imperial Chemical Industries (ICI). Dr Philip Baxter at ICI made the first small batch of gaseous uranium hexafluoride for Professor James Chadwick in 1940. ICI received a formal contract later in 1940 to make 3 kg of this vital material for the future work. Most of the other research was funded by the universities themselves.

Two important developments came from the work at Cambridge. The first was experimental proof that a chain reaction could be sustained with slow neutrons in a mixture of uranium oxide and heavy water, i.e. the output of neutrons was greater than the input. The second was by Bretscher and Feather based on earlier work by Halban and Kowarski soon after they arrived in Britain from Paris. When U-235 and U-238 absorb slow neutrons, the probability of fission in U-235 is much greater than in U-238. The U-238 is more likely to form a new isotope U-239, and this isotope rapidly emits an electron to become a new element with a mass of 239 and an Atomic Number of 93. This element also emits an electron and becomes a new element of mass 239 and Atomic Number 94, which has a much greater half-life. Bretscher and Feather argued on theoretical grounds that element 94 would be readily fissionable by slow and fast neutrons, and had the added advantage that it was chemically different to uranium and therefore could easily be separated from it.
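Written out in modern notation, the sequence described above is a neutron capture followed by two successive beta decays. The element symbols Np and Pu use the names adopted later, and the half-lives quoted after the block are standard modern values assumed here rather than figures from this account.

```latex
\begin{align*}
{}^{238}_{92}\mathrm{U} + n &\rightarrow {}^{239}_{92}\mathrm{U} \\
{}^{239}_{92}\mathrm{U} &\xrightarrow{\ \beta^{-}\ } {}^{239}_{93}\mathrm{Np} \\
{}^{239}_{93}\mathrm{Np} &\xrightarrow{\ \beta^{-}\ } {}^{239}_{94}\mathrm{Pu}
\end{align*}
```

The first decay has a half-life of about 23.5 minutes and the second about 2.4 days, whereas the final product has a half-life of roughly 24,000 years, which is why it accumulates in irradiated uranium.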

This new development was also confirmed in independent work by McMillan and Abelson in the USA in 1940. Dr Kemmer of the Cambridge team proposed the names neptunium for the new element # 93 and plutonium for # 94 by analogy with the outer planets Neptune and Pluto beyond Uranus (uranium, element # 92). The Americans fortuitously suggested the same names, and the identification of plutonium in 1941 is generally credited to Glenn Seaborg.

Developing the concepts

By the end of 1940 remarkable progress had been made by the several groups of scientists coordinated by the MAUD Committee and for the expenditure of a relatively small amount of money. All of this work was kept secret, whereas in the USA several publications continued to appear in 1940 and there was also little sense of urgency.

By March 1941 one of the most uncertain pieces of information was confirmed - the fission cross-section of U-235. Peierls and Frisch had initially predicted in 1940 that almost every collision of a neutron with a U-235 atom would result in fission, and that both slow and fast neutrons would be equally effective. It was later discerned that slow neutrons were very much more effective, which was of enormous significance for nuclear reactors but fairly academic in the bomb context. Peierls then stated that there was now no doubt that the whole scheme for a bomb was feasible provided highly enriched U-235 could be obtained. The predicted critical size for a sphere of U-235 metal was about 8 kg, which might be reduced by use of an appropriate material for reflecting neutrons. However, direct measurements on U-235 were still necessary and the British pushed for urgent production of a few micrograms.

The final outcome of the MAUD Committee was two summary reports in July 1941. One was on 'Use of Uranium for a Bomb' and the other was on 'Use of Uranium as a Source of Power'. The first report concluded that a bomb was feasible and that one containing some 12 kg of active material would be equivalent to 1,800 tons of TNT and would release large quantities of radioactive substances which would make places near the explosion site dangerous to humans for a long period. It estimated that a plant to produce 1 kg of U-235 per day would cost £5 million and would require a large skilled labour force that was also needed for other parts of the war effort. Suggesting that the Germans could also be working on the bomb, it recommended that the work should be continued with high priority in cooperation with the Americans, even though they seemed to be concentrating on the future use of uranium for power and naval propulsion.
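To put the first report's estimate in perspective, the sketch below compares it with the energy that would be released if every nucleus in the material underwent fission, using the figure of about 200 million electron volts per fission quoted earlier in this article. The physical constants, the rounded molar mass, the 4.184 GJ convention for a ton of TNT and the function names are assumptions introduced for illustration; the resulting fraction is an inference from these numbers, not a claim made in the report.

```python
AVOGADRO = 6.02214076e23         # atoms per mole
JOULES_PER_EV = 1.602176634e-19  # joules per electron volt
JOULES_PER_TON_TNT = 4.184e9     # conventional TNT equivalent (assumed unit)
MOLAR_MASS_U235_G = 235.0        # grams per mole, rounded
ENERGY_PER_FISSION_EV = 200e6    # figure quoted earlier in the article


def fission_energy_joules(mass_kg: float) -> float:
    """Energy released if every U-235 nucleus in mass_kg undergoes fission."""
    atoms = mass_kg * 1000.0 / MOLAR_MASS_U235_G * AVOGADRO
    return atoms * ENERGY_PER_FISSION_EV * JOULES_PER_EV


def implied_fraction_fissioned(mass_kg: float, yield_tons_tnt: float) -> float:
    """Fraction of the material that must fission to give the stated yield."""
    return yield_tons_tnt * JOULES_PER_TON_TNT / fission_energy_joules(mass_kg)


per_kg = fission_energy_joules(1.0)
print(f"complete fission of 1 kg: {per_kg:.2e} J")
print(f"implied fraction for 12 kg and 1,800 tons: "
      f"{implied_fraction_fissioned(12.0, 1800.0):.2%}")
```

On these assumptions the estimate corresponds to well under one percent of the material actually undergoing fission.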

The second MAUD Report concluded that the controlled fission of uranium could be used to provide energy in the form of heat for use in machines, as well as providing large quantities of radioisotopes which could be used as substitutes for radium. It referred to the use of heavy water and possibly graphite as moderators for the fast neutrons, and noted that even ordinary water could be used if the uranium was enriched in the U-235 isotope. It concluded that the 'uranium boiler' had considerable promise for future peaceful uses but that it was not worth considering during the present war. The Committee recommended that Halban and Kowarski should move to the USA where there were plans to make heavy water on a large scale. The possibility that the new element plutonium might be more suitable than U-235 was mentioned, so that the work in this area by Bretscher and Feather should be continued in Britain.

The two reports led to a complete reorganisation of work on the bomb and the 'boiler'. It was claimed that the work of the committee had put the British in the lead and that "in its fifteen months' existence it had proved itself one of the most effective scientific committees that ever existed". The basic decision that the bomb project would be pursued urgently was taken by the Prime Minister, Winston Churchill, with the agreement of the Chiefs of Staff.

The reports also led to high-level reviews in the USA, particularly by a Committee of the National Academy of Sciences, initially concentrating on the nuclear power aspect. Little emphasis was given to the bomb concept until 7 December 1941, when the Japanese attacked Pearl Harbor and the Americans entered the war directly. The huge resources of the USA were then applied without reservation to developing atomic bombs.

The Manhattan Project

The Americans increased their effort rapidly and soon outstripped the British. Research continued in each country with some exchange of information. Several of the key British scientists visited the USA early in 1942 and were given full access to all of the information available. The Americans were pursuing three enrichment processes in parallel: Professor Lawrence was studying electromagnetic separation at Berkeley (University of California), E. V. Murphree of Standard Oil was studying the centrifuge method developed by Professor Beams, and Professor Urey was coordinating the gaseous diffusion work at Columbia University. Responsibility for building a reactor to produce fissile plutonium was given to Arthur Compton at the University of Chicago. The British were only examining gaseous diffusion.

In June 1942 the US Army took over process development, engineering design, procurement of materials and site selection for pilot plants for four methods of making fissionable material (because none of the four had been shown to be clearly superior at that point) as well as the production of heavy water. With this change, information flow to Britain dried up. This was a major setback to the British and the Canadians who had been collaborating on heavy water production and on several aspects of the research program. Thereafter, Churchill sought information on the cost of building a diffusion plant, a heavy water plant and an atomic reactor in Britain.

After many months of negotiations an agreement was finally signed by Mr Churchill and President Roosevelt in Quebec in August 1943, according to which the British handed over all of their reports to the Americans and in return received copies of General Groves' progress reports to the President. The latter showed that the entire US program would cost over $1,000 million, all for the bomb, as no work was being done on other applications of nuclear energy.

Construction of production plants for electromagnetic separation (in calutrons) and gaseous diffusion was well under way. An experimental graphite pile constructed by Fermi had operated at the University of Chicago in December 1942 – the first controlled nuclear chain reaction.

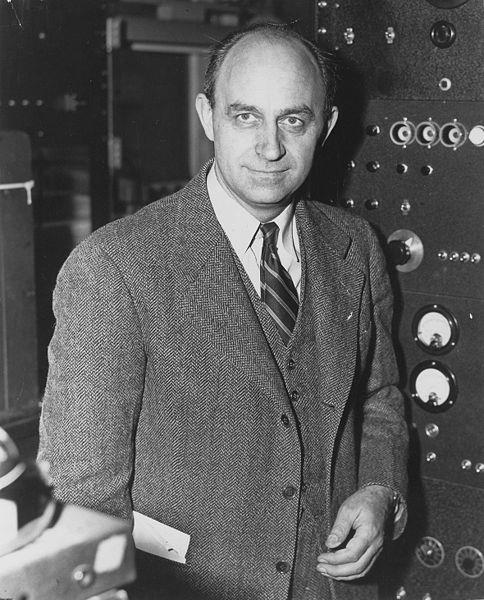

Enrico Fermi, c. 1943-1949 (National Archives and Records Administration)

A full-scale production reactor for plutonium was being constructed at Argonne, with further ones at Oak Ridge and then Hanford, plus a reprocessing plant to extract the plutonium. Four plants for heavy water production were being built, one in Canada and three in the USA. A team under Robert Oppenheimer at Los Alamos in New Mexico was working on the design and construction of both U-235 and Pu-239 bombs. The outcome of the huge effort, with assistance from the British teams, was that sufficient Pu-239 and highly enriched U-235 (from calutrons and diffusion at Oak Ridge) was produced by mid-1945. The uranium mostly originated from the Belgian Congo.

The first atomic device was tested successfully at Alamogordo in New Mexico on 16 July 1945. It used plutonium made in a nuclear pile. The teams did not consider that it was necessary to test a simpler U-235 device. The first atomic bomb, which contained U-235, was dropped on Hiroshima on 6 August 1945. The second bomb, containing Pu-239, was dropped on Nagasaki on 9 August. That same day, the USSR declared war on Japan. On 10 August 1945 the Japanese Government surrendered.

The Soviet bomb

Initially Stalin was not enthusiastic about diverting resources to develop an atomic bomb, until intelligence reports suggested that such research was under way in Germany, Britain and the USA. Consultations with Academicians Ioffe, Kapitsa, Khlopin and Vernadsky convinced him that a bomb could be developed relatively quickly and he initiated a modest research program in 1942. Igor Kurchatov, then relatively young and unknown, was chosen to head it and in 1943 he became Director of Laboratory No.2 recently established on the outskirts of Moscow. This was later renamed LIPAN, then became the Kurchatov Institute of Atomic Energy. Overall responsibility for the bomb program rested with Security Chief Lavrenti Beria and its administration was undertaken by the First Main Directorate (later called the Ministry of Medium Machine Building).

Research had three main aims: to achieve a controlled chain reaction; to investigate methods of isotope separation; and to look at designs for both enriched uranium and plutonium bombs. Attempts were made to initiate a chain reaction using two different types of atomic pile: one with graphite as a moderator and the other with heavy water. Three possible methods of isotope separation were studied: counter-current thermal diffusion, gaseous diffusion and electromagnetic separation.

After the defeat of Nazi Germany in May 1945, German scientists were "recruited" to the bomb program to work in particular on isotope separation to produce enriched uranium. This included research into gas centrifuge technology in addition to the three other enrichment technologies.

The test of the first US atomic bomb in July 1945 had little impact on the Soviet effort, but by this time, Kurchatov was making good progress towards both a uranium and a plutonium bomb. He had begun to design an industrial scale reactor for the production of plutonium, while those scientists working on uranium isotope separation were making advances with the gaseous diffusion method.

It was the bombing of Hiroshima and Nagasaki the following month which gave the program a high profile, and construction began in November 1945 of a new city in the Urals which would house the first plutonium production reactors – Chelyabinsk-40 (later known as Chelyabinsk-65 or the Mayak production association). This was the first of ten secret nuclear cities to be built in the Soviet Union. The first of five reactors at Chelyabinsk-65 came on line in 1948. This town also housed a processing plant for extracting plutonium from irradiated uranium.

As for uranium enrichment technology, it was decided in late 1945 to begin construction of the first gaseous diffusion plant at Verkh-Neyvinsk (later the closed city of Sverdlovsk-44), some 50 kilometres from Yekaterinburg (formerly Sverdlovsk) in the Urals. Special design bureaux were set up at the Leningrad Kirov Metallurgical and Machine-Building Plant and at the Gorky (Nizhny Novgorod) Machine Building Plant. Support was provided by a group of German scientists working at the Sukhumi Physical Technical Institute.

In April 1946 design work on the bomb was shifted to Design Bureau-11 – a new centre at Sarov some 400 kilometres from Moscow (subsequently the closed city of Arzamas-16). More specialists were brought in to the program, including metallurgist Yefim Slavsky, who was given the immediate task of producing the very pure graphite Kurchatov needed for his plutonium production pile, known as F-1, constructed at Laboratory No.2. The pile was operated for the first time in December 1946. Support was also given by Laboratory No.3 in Moscow – now the Institute of Theoretical and Experimental Physics – which had been working on nuclear reactors.

Work at Arzamas-16 was influenced by foreign intelligence gathering and the first device was based closely on the Nagasaki bomb (a plutonium device). In August 1947 a test site was established near Semipalatinsk in Kazakhstan and was ready for the detonation two years later of the first bomb, RDS-1. Even before this was tested in August 1949, another group of scientists led by Igor Tamm and including Andrei Sakharov had begun work on a hydrogen bomb.

Revival of the 'nuclear boiler'

By the end of World War II, the project predicted and described in detail only five and a half years before in the Frisch-Peierls Memorandum had been brought to partial fruition, and attention could turn to the peaceful and directly beneficial application of nuclear energy. Post-war, weapons development continued on both sides of the "iron curtain", but a new focus was on harnessing the great atomic power, now dramatically (if tragically) demonstrated, for making steam and electricity.

In the course of developing nuclear weapons the Soviet Union and the West had acquired a range of new technologies and scientists realised that the tremendous heat produced in the process could be tapped either for direct use or for generating electricity. It was also clear that this new form of energy would allow development of compact long-lasting power sources which could have various applications, not least for shipping, and especially in submarines.

The first nuclear reactor to produce electricity (albeit a trivial amount) was the small Experimental Breeder reactor (EBR-1) designed and operated by Argonne National Laboratory and sited in Idaho, USA. The reactor started up in December 1951.

In 1953 President Eisenhower proposed his "Atoms for Peace" program, which reoriented significant research effort towards electricity generation and set the course for civil nuclear energy development in the USA.

In the Soviet Union, work was under way at various centres to refine existing reactor designs and develop new ones. The Institute of Physics and Power Engineering (FEI) was set up in May 1946 at the then-closed city of Obninsk, 100 km southwest of Moscow, to develop nuclear power technology. The existing graphite-moderated channel-type plutonium production reactor was modified for heat and electricity generation and in June 1954 the world's first nuclear powered electricity generator began operation at the FEI in Obninsk. The AM-1 (Atom Mirny – peaceful atom) reactor was water-cooled and graphite-moderated, with a design capacity of 30 MWt or 5 MWe. It was similar in principle to the plutonium production reactors in the closed military cities and served as a prototype for other graphite channel reactor designs including the Chernobyl-type RBMK (reaktor bolshoi moshchnosty kanalny – high power channel reactor) reactors. AM-1 produced electricity until 1959 and was used until 2000 as a research facility and for the production of isotopes.

Also in the 1950s FEI at Obninsk was developing fast breeder reactors (FBRs) and lead-bismuth reactors for the navy. In April 1955 the BR-1 (bystry reaktor – fast reactor) fast neutron reactor began operating. It produced no power but led directly to the BR-5, which started up in 1959 with a capacity of 5 MWt, and which was used to do the basic research necessary for designing sodium-cooled FBRs. It was upgraded and modernized in 1973 and then underwent major reconstruction in 1983 to become the BR-10 with a capacity of 8 MWt which is now used to investigate fuel endurance, to study materials and to produce isotopes.

The main US effort, under Admiral Hyman Rickover, developed the pressurized water reactor (PWR) for naval (particularly submarine) use. The PWR used enriched uranium oxide fuel and was moderated and cooled by ordinary (light) water. The Mark 1 prototype naval reactor started up in March 1953 in Idaho, and the first nuclear-powered submarine, USS Nautilus, was launched in 1954. In 1959 both USA and USSR launched their first nuclear-powered surface vessels.

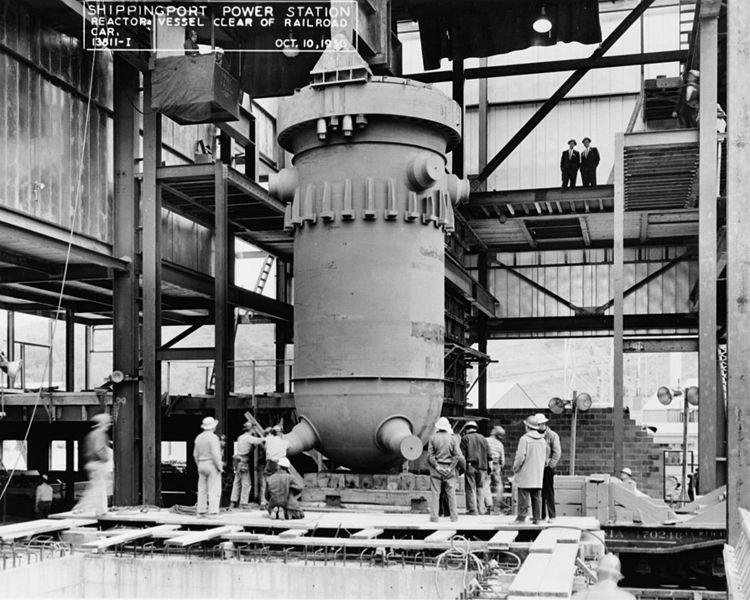

The Mark 1 reactor led to the US Atomic Energy Commission building the 60 MWe Shippingport demonstration PWR reactor in Pennsylvania, which started up in 1957 and operated until 1982.

Installation of reactor vessel at Shippingport, the first commercial US nuclear power plant (US Library of Congress)

Since the USA had a virtual monopoly on uranium enrichment in the West, British development took a different tack and resulted in a series of reactors fuelled by natural uranium metal, moderated by graphite, and gas-cooled. The first of these 50 MWe Magnox types, Calder Hall 1, started up in 1956 and ran until 2003. However, after 1963 (and 26 units) no more were commenced. Britain next embraced the advanced gas-cooled reactor (using enriched oxide fuel) before conceding the pragmatic virtues of the PWR design.

Nuclear energy goes commercial

In the USA, Westinghouse designed the first fully commercial PWR of 250 MWe, Yankee Rowe, which started up in 1960 and operated to 1992. Meanwhile the boiling water reactor (BWR) was developed by the Argonne National Laboratory, and the first one, Dresden-1 of 250 MWe, designed by General Electric, was started up earlier in 1960. A prototype BWR, Vallecitos, ran from 1957 to 1963. By the end of the 1960s, orders were being placed for PWR and BWR reactor units of more than 1000 MWe.

Canadian reactor development headed down a quite different track, using natural uranium fuel and heavy water as a moderator and coolant. The first unit started up in 1962. This CANDU design continues to be refined.

France started out with a gas-graphite design similar to Magnox and the first reactor started up in 1956. Commercial models operated from 1959. It then settled on three successive generations of standardized PWRs, which was a very cost-effective strategy.

In 1964 the first two Soviet nuclear power plants were commissioned. A 100 MW boiling water graphite channel reactor began operating in Beloyarsk (Urals). In Novovoronezh (Volga region) a new design – a small (210 MW) pressurized water reactor (PWR) known as a VVER (vodo-vodyanoi energetichesky reaktor – water-cooled power reactor) – was built.

The first large RBMK (1,000 MW – high-power channel reactor) started up at Sosnovy Bor near Leningrad in 1973, and in the Arctic northwest a VVER with a rated capacity of 440 MW began operating. This was superseded by a 1000 MWe version which became a standard design.

In Kazakhstan the world's first commercial prototype fast neutron reactor (the BN-350) started up in 1972 with a design capacity of 135 MWe (net), producing electricity and heat to desalinate Caspian seawater. In the USA, UK, France and Russia a number of experimental fast neutron reactors produced electricity from 1959, the last of these closing in 2009. This left Russia's BN-600 as the only commercial fast reactor, until joined by a BN-800 in 2016.

Around the world, with few exceptions, other countries have chosen light-water designs for their nuclear power programmes, so that today 69% of the world capacity is PWR and 20% BWR.

The nuclear power brown-out and revival

From the late 1970s to about 2002 the nuclear power industry suffered some decline and stagnation. Few new reactors were ordered, and the number coming on line from the mid-1980s little more than matched retirements, though capacity increased by nearly one third and output increased 60% due to capacity plus improved load factors. The share of nuclear in world electricity from the mid-1980s was fairly constant at 16-17%. Many reactor orders from the 1970s were cancelled. The uranium price dropped accordingly, and also because of an increase in secondary supplies. Oil companies which had entered the uranium field bailed out, and there was a consolidation of uranium producers.

However, by the late 1990s the first of the third-generation reactors was commissioned – Kashiwazaki-Kariwa 6 – a 1350 MWe Advanced BWR, in Japan. This was a sign of the recovery to come.

In the new century several factors combined to revive the prospects for nuclear power. The first was the realisation of the scale of projected increased electricity demand worldwide, but particularly in rapidly-developing countries. The second was the awareness of the importance of energy security – the prime importance of each country having assured access to affordable energy, and particularly to dispatchable electricity able to meet demand at all times. The third was the need to limit carbon emissions due to concerns about climate change.

These factors coincided with the availability of a new generation of nuclear power reactors, and in 2004 the first of the late third-generation units was ordered for Finland – a 1600 MWe European PWR (EPR). A similar unit is being built in France, and two new Westinghouse AP1000 units are under construction in the USA.

But plans in Europe and North America are overshadowed by those in Asia, particularly China and India. China alone plans and is building towards a huge increase in nuclear power capacity by 2030, and has more than one hundred further large units proposed and backed by credible political determination and popular support. Many of these are the latest Western design, or adaptations thereof. Others are substantially local designs.

The history of nuclear power thus starts with science in Europe, blossoms in the UK and USA with the latter's technological and economic might, languishes for a few decades, then has a new growth spurt in east Asia. In the process, over 17,000 reactor-years of operation have been accumulated in providing a significant proportion of the world’s electricity.

Notes & references

General sources

Atomic Rise and Fall, the Australian Atomic Energy Commission 1953-1987, by Clarence Hardy, Glen Haven, 1999. Chapter 1 provides the major source for 1939-45

Radiation in Perspective, OECD NEA, 1997

Nuclear Fear, by Spencer Weart, Harvard UP, 1988

Judith Perera (Russian material)

Alexander Petrov, ITER Domestic Agency of the Russian Federation, A Short History of the Ioffe Institute

Mark Walker, Nazis and the Bomb, NOVA (November 2005)

Carl H. Meyer and Günter Schwarz, The Theory of Nuclear Explosives that Heisenberg did not Present to the German Military, Max Planck Institute for the History of Science, Preprint #467 (2015)

Related information

Nuclear Power Reactors

Plans For New Reactors Worldwide